The 17th Speech in Noise Workshop (SPIN2026) took place on 12-13 January 2026 in Paris, France, in the buildings of the prestigious École Normale Supérieure.

Watch out: if you are a regular SPIN participant, note that this time the meeting was on a Monday and Tuesday rather than the usual Thursday and Friday.

- SPIN2027 will take place in Brussels, Belgium!

- If you presented at SPIN, consider uploading your poster and sending a DOI. See details.

Many thanks to Clémence Basire, Azal Le Bagousse, Simon Major and David López-Ramos, who helped make SPIN2026 possible!

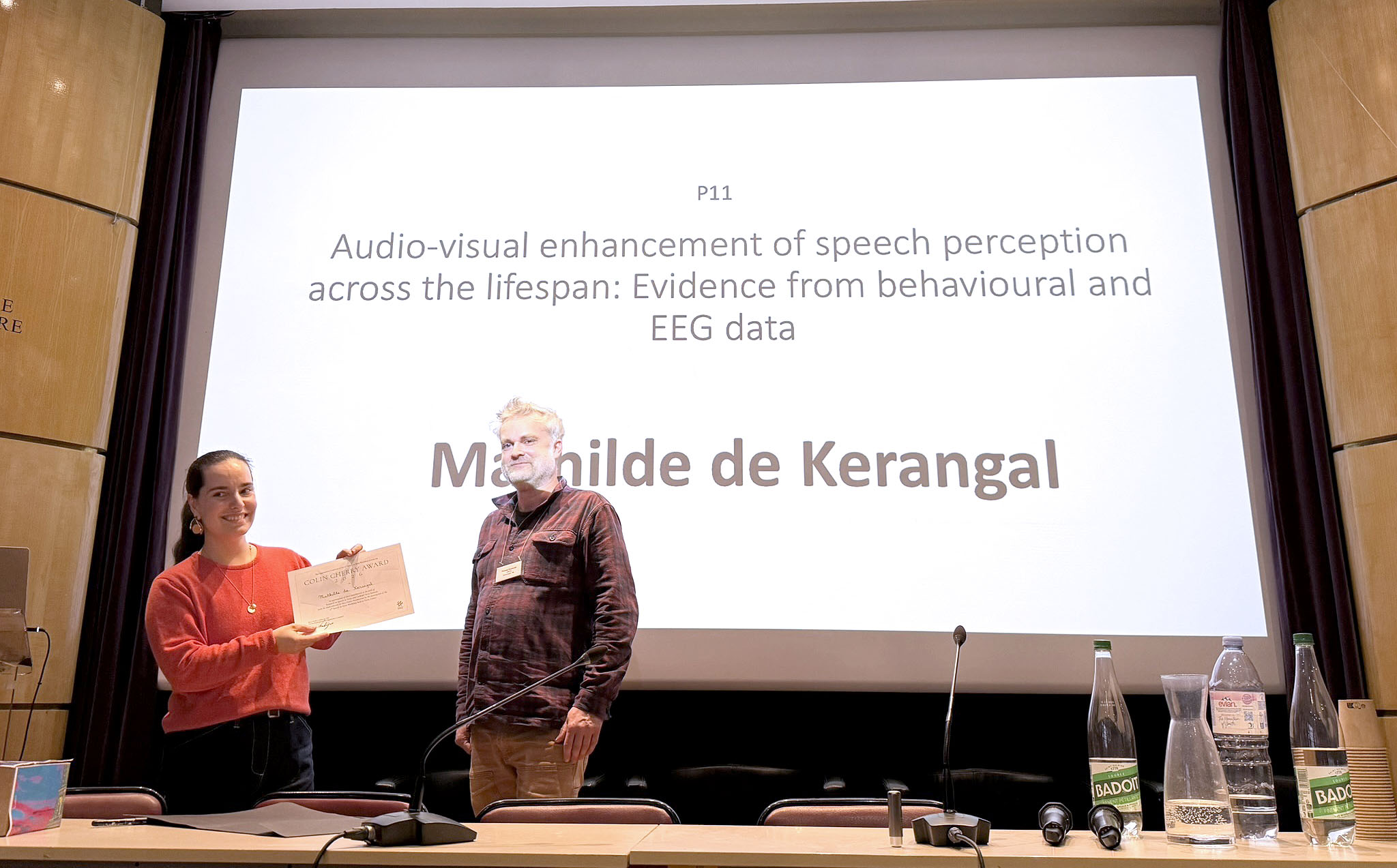

Colin Cherry Award 2026

The Colin Cherry Award 2026 was awarded to Mathilde de Kerangal for her poster "Audio-visual enhancement of speech perception across the lifespan: Evidence from behavioural and EEG data". Congratulations!

The Colin Cherry Award is awarded every year in appreciation of a contribution to the field of Research on Speech in Noise and Cocktail Party Sciences, for the work selected as best poster presentation by the participants of the Speech in Noise Workshop. The prize consists of a cocktail shaker, and the recipient is invited to present their work at the following SPIN workshop.

Programme overview

Keynote Lecture:

“Examination of speech coding in the human auditory nerve using intracranial recordings”

Xavier Dubernard

Institut Otoneurochirurgical de Champagne Ardenne, CHU de Reims, France | Institut des Neurosciences de Montpellier, France

Invited speakers:

- Colin Cherry Award 2025

Jessica L. Pepper

Lancaster University, Lancaster, UK

Age-related changes in alpha activity during dual-task speech perception and balance

- Emma Holmes

University College London, UK

How voice familiarity affects speech-in-speech perception

- Miriam I. Marrufo-Perez

University of Salamanca, Spain

Adaptation to noise: A minireview of mechanisms and individual factors

- Sven Mattys

University of York, UK

What does cognitive listening mean? Is it time for a rethink?

- Eline Borch Petersen

ORCA Labs, Scientific Institute of WS Audiology, Lynge, Denmark

Miscommunications in triadic conversations: Effects of hearing loss, hearing aids, and background noise

- Laura Rachman

University of Groningen / University Medical Center Groningen, Netherlands

Voice cue sensitivity and speech perception in speech maskers in children with hearing aids

- Léo Varnet

Laboratoire des systèmes perceptifs, Département d’études cognitives, École normale supérieure, PSL University, CNRS, 75005 Paris, France

The microscopic impact of noise on phoneme perception and some implications for the nature of phonetic cues

- Astrid van Wieringen

Experimental ORL, Dept Neurosciences, KU Leuven, Belgium

Cochlear implantation in children with single sided deafness

- Katharina von Kriegstein

Dresden University of Technology, Germany

How do visual mechanisms help auditory-only speech and speaker recognition?